Queuing theory basics part 1: single server

These are my notes for the following three lectures on queueing theory:

In a queueing model, people arrive at the queue, wait in line, and then are serviced by a server.

There may be a single server or multiple servers (sharing a single line).

The queue capacity may be finite or infinite.

In a finite queue, people may be unable to join the queue if it is full.

Arrivals and servers are each modeled by a distribution over their rates.

- Arrivals are typically modeled by a Poisson distribution with rate λ (e.g., 4 per hour).

- Service times are typically modeled by an exponential distribution with rate μ (e.g., 5 per hour).

The mean service time is then μ1.

In the following, we will assume that arrivals and servers follow a Poisson and exponential distribution, respectively.

These two distributions are memoryless,

meaning that arrivals are independent of one another, as are service times.

Note that if we have an infinite queue capacity and λ>μ, then the queue will grow indefinitely.

The queueing discipline determines which person in line gets serviced next.

An example queueing discipline is first-in, first-out (FIFO),

where people are serviced in the order in which they arrive.

Steady-state behavior is often the same regardless of queueing discipline.

In the following, we will assume a FIFO queueing discipline.

Notation

Queues can be specified in Kendall's notation.

The notation is A/S/c/K/N/D, where

- A = arrival process, with "M" denoting a memoryless process.

- S = service time distribution, with "M" denoting a memoryless distribution of service time.

- c = number of servers

- K = queue capacity

- N = population size (total population from which arrivals are drawn)

- D = queueing discipline, e.g., FIFO

For example, a M/M/1/∞/∞/FIFO queue

is a memoryless queue

with 1 server,

infinite queue capacity,

infinite population,

and FIFO queueing discipline.

If unspecified, we assume K/N/D is ∞/∞/FIFO.

For example, M/M/1 = M/M/1/∞/∞/FIFO.

Single server, infinite queue (M/M/1)

Let's consider the case of a queue with infinite capacity and a single server.

Assume that arrivals are Poisson distributed with rate λ

and service times are exponentially distributed with rate μ.

Distribution of queue lengths

In a Poisson distribution, no two events occur at the same instant.

Let h be an infinitesimal interval in which exactly 0 or 1 event occurs.

Let pn(t) to be the probability that n people are in the system (waiting in line or being serviced) at time t.

Then

pn(t+h)=++P(1 arrival, 0 service)⋅pn−1(t)P(0 arrival, 1 service)⋅pn+1(t)P(0 arrival, 0 service)⋅pn(t).

As h is defined to be the interval in which at most 1 event occurs,

there is no P(1 arrival, 1 service)⋅pn(t) term.

In a Poisson distribution, the probability of k events occurring in an interval τ is

P(k events in interval τ)=f(k;τ)=eλτk!(λτ)k.

This distribution has mean E[k]=∑k≥0kf(k;τ)=λτ.

As we have defined h to be an interval with at most 1 event,

P(1 event)=0⋅P(0 events)+1⋅P(1 event)=Ef(k;h)[k]=λh

Therefore

pn(t+h)=++λh⋅(1−μh)⋅pn−1(t)(1−λh)⋅μh⋅pn+1(t)(1−λh)⋅(1−μh)⋅pn(t).

To first order in h,

pn(t+h)≈λhpn−1(t)+μhpn+1(t)+(1−λh−μh)pn(t),

thus

hpn(t+h)−pn(t)=λpn−1(t)+μpn+1(t)−(λ+μ)pn(t).

At steady state, we expect a unchanging distribution of queue lengths, so

0=hpn(t+h)−pn(t)⟹λpn−1+μpn+1=(λ+μ)pn.(1)

When the queue is empty, no service occurs ( P(0 service)=1), so

p0(t+h)=P(0 arrivals, 1 service)⋅p1(t)+P(0 arrivals, 0 service)⋅p0(t)=(1−λh)⋅μh⋅p1(t)+(1−λh)⋅1⋅p0(t)≈μh⋅p1(t)+(1−λh)p0(t).

Hence, at steady state,

0=hp0(t+h)−p0(t)=μp1(t)−λp0(t)⟹μp1=λp0.(2)

By (1) ( n=1 ) and (2),

λp0+μp2=(λ+μ)p1=λp1+μp1=λp1+λp0⟹p2=μλp1=(μλ)2p0.

Let ρ=μλ.

By a similar argument, pn=ρnp0 for all n≥1.

As this is a probability distribution,

1=n≥0∑pn=p0n≥0∑ρn=p0⋅1−ρ1⟹p0=1−ρ.

Therefore

pn=ρnp0=ρn(1−ρ).(3)

Expected queue length and wait time

Using the distribution of the number of people in the system (3),

we can derive the expected number of people in the system

(recall that ρ=μλ):

Ls=Ep[n]=n≥0∑nρn(1−ρ)=(1−ρ)ρn≥1∑nρn−1=(1−ρ)ρdρdn≥1∑ρn=(1−ρ)ρdρd1−ρ1=(1−ρ)ρ(1−ρ)21=1−ρρ.

The expected number of people being serviced

is the expected proportion of time the system is nonempty,

i.e., 1−p0=1−(1−ρ)=ρ.

Thus the expected number of people waiting to be serviced is Lq=Ls−ρ.

The expected time spent in the system (including time being serviced)

is related to the queue length by Little's law:

Ls=λWs.

A similar relation applies to the expected time spent waiting to be serviced:

Lq=λWq.

The utilization is the probability that the server is busy:

U=1−p0=1−(1−ρ)=ρ.

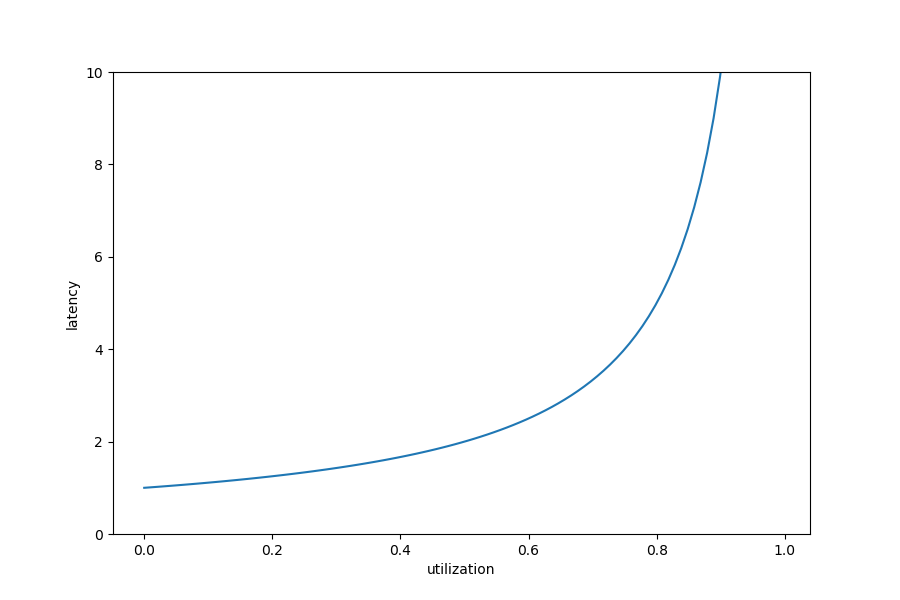

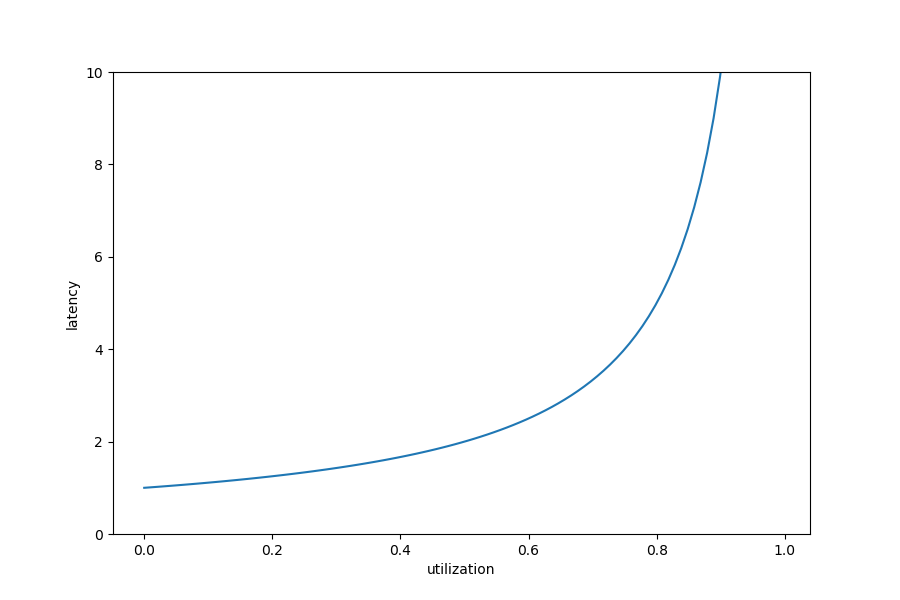

If we plot the service time (i.e., latency) with respect to utilization,

we see that it begins increasing rapidly around U=0.8:

import matplotlib.pyplot as plt

import numpy as np

rho = np.linspace(0, 1, 100)

latency = 1/(1-rho)

plt.figure(figsize=(9, 6))

plt.ylim(0, 10)

plt.xlabel('utilization')

plt.ylabel('latency')

plt.plot(rho, latency)

plt.savefig('mm1-latency.png')

plt.show()

Single server, finite queue (M/M/1/N)

The recurrence for pn=ρnp0,n≤N remains the same,

and we can use it to compute p0:

1=n=0∑Npn=n=0∑Nρnp0=p0⋅1−ρ1−ρN+1⟹p0=1−ρN+11−ρ.

With this, we can compute the expected number of people in the system:

Ls=n=0∑Nnpn=n=0∑Nnρnp0=p0ρn=0∑Nnρn−1=p0ρdρdn=0∑Nρn=p0ρdρd1−ρ1−ρN+1=p0ρ⋅(1−ρ)21+NρN+1−(N+1)ρ.

A similar calculation may be performed for Lq,

or we can reason through it as follows:

As people are unable to join the queue when it is full,

there is an effective arrival rate λeff<λ.

A person may only join the queue if there are fewer than N others in the system,

which happens with probability 1−pN.

Thus λeff=λ(1−pN).

From this, we can compute the expected queue length, service time, and wait time:

Lq=Ls−μλeff,Ws=λeffLs,Wq=λeffLq.

Continued in part 2...