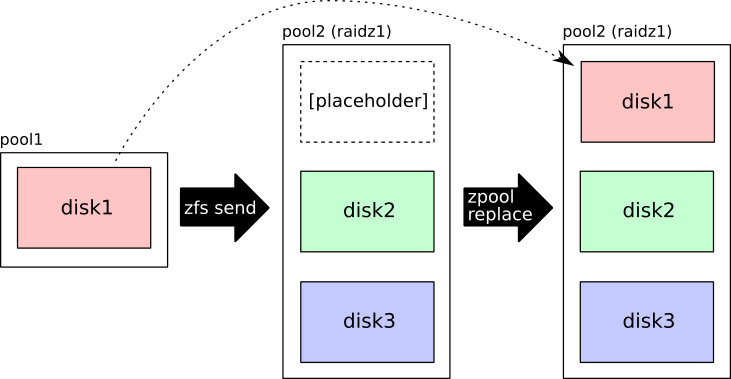

Migrating a nonredundant zpool to raidz1 (without a spare disk)

I had a zpool pool1 backed by a single 3TB disk. I ordered two 4TB disks and wanted to migrate the data from pool1 to a new raidz1 zpool pool2 backed by the 3TB disk and the two 4TB disks. Here's how.

Create a zpool with the two 4TB disks and a placeholder file in place of the 3TB disk:

$ sudo fdisk -l /dev/disk/by-id/wwn-0x5000000000000001

Disk /dev/disk/by-id/wwn-0x5000000000000001: 2.75 TiB, 3000592942592 bytes, 5860533091 sectors

$ sudo truncate -s $((3000592942592-1024**3)) /fakedisk

$ sudo zpool create \

> -O compression=on \

> -O encryption=on \

> -O keyformat=passphrase \

> -O keylocation=file:///etc/cryptfs.key \

> -f \

> pool2 raidz1 /fakedisk /dev/disk/by-id/wwn-0x500000000000000[23]

$ sudo zpool offline pool2 /fakedisk

$ sudo rm /fakedisk

Some notes:

- When ZFS is using a whole disk rather than a file or partition, it applies its own partitioning scheme that uses some of the space on the disk. I made the placeholder file 1GiB smaller than the actual disk as padding for the space used by ZFS. The vdev will be expanded to use the full space later.

- I'm using ZFS 0.8.x encryption on the new zpool. This is optional.

- The WWN is a stable identifier that uniquely identifies the disk. It typically encodes the manufacturer and serial number. wwn-0x..1 is the old 3TB disk and wwn-0x..[23] are the two new 4TB disks.

- The -f is required to allow disks and files to be mixed in the same raidz group.
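The placeholder size passed to truncate can be double-checked with plain shell arithmetic. This is only a minimal sketch: the function name placeholder_size is hypothetical, and the 1GiB of padding is the value chosen above, not something ZFS mandates.

```shell
# placeholder_size BYTES: disk size in bytes minus 1GiB of padding.
# Hypothetical helper for illustration; not part of ZFS.
placeholder_size() {
    echo $(( $1 - 1024 * 1024 * 1024 ))
}

# Byte count reported by fdisk for the 3TB disk above.
placeholder_size 3000592942592   # prints 2999519200768
```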

Here's what the zpool looks like after creation:

$ zpool list pool2

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

pool2 8.17T 1.20M 8.17T - 2.72T 0% 0% 1.00x DEGRADED -

$ zfs list pool2

NAME USED AVAIL REFER MOUNTPOINT

pool2 559K 5.27T 128K /pool2

The total zpool size is about 9TB (8.17TiB): one 3TB disk and 3TB from each 4TB disk. The expandsize is 1TB+1TB+1TB=3TB (2.72TiB) because that is how much the total zpool size would increase if the 3TB disk were replaced with a 4TB disk. The root ZFS dataset shows 3TB+3TB=6TB (5.27TiB) available because every 2 blocks written to the zpool are encoded into 2 data blocks and 1 parity block, i.e., 1/3 of the space is dedicated to redundancy.
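The arithmetic above can be sketched as a small shell function. It assumes whole-number disk sizes in decimal TB, ignores ZFS metadata overhead (one reason the TiB figures reported by zpool and zfs come out a little lower), and assumes the smallest disk is later replaced by one at least as large as the next smallest. The name raidz1_sizes is hypothetical.

```shell
# raidz1_sizes SIZE...: rough raidz1 capacity figures, sizes in decimal TB.
# Hypothetical helper for illustration; ignores metadata overhead.
raidz1_sizes() {
    n=$#
    sorted=$(printf '%s\n' "$@" | sort -n)
    min=$(printf '%s\n' "$sorted" | sed -n 1p)    # smallest disk
    next=$(printf '%s\n' "$sorted" | sed -n 2p)   # next smallest disk
    raw=$(( n * min ))                # every disk contributes only "min"
    usable=$(( (n - 1) * min ))       # one disk's worth goes to parity
    expand=$(( n * (next - min) ))    # gain once the smallest disk is upgraded
    echo "raw=$raw usable=$usable expand=$expand"
}

raidz1_sizes 3 4 4   # prints raw=9 usable=6 expand=3
```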

Normally zfs send -R would be sufficient to move the data over to the new zpool, but because I'm migrating from a zpool encrypted with dm-crypt (i.e., no encryption at the ZFS layer) to a ZFS-encrypted zpool, it is not an option. Instead, I created two helper scripts to send all snapshots and datasets:

#!/bin/bash

# send-snapshots

set -eux

src="$1"

dst="$2"

first="$(zfs list -Hr -o name -t snapshot "$src" | head -1)"

last="$(zfs list -Hr -o name -t snapshot "$src" | tail -1)"

zfs send "$first" | zfs recv -u "$dst"

zfs send -I "$first" "$last" | zfs recv -u "$dst"

zfs mount "$dst"

#!/bin/bash

# send-datasets

set -eux

src="$1"

dst="$2"

zfs list -Hr -t filesystem,volume -o name "$src" \

| tail -n +2 \

| cut -d/ -f2 \

| while read -r ds; do ./send-snapshots "$src/$ds" "$dst/$ds"; done
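One edge case worth keeping in mind: if a dataset has exactly one snapshot, first and last in send-snapshots are identical, so the incremental step has nothing to send and may be rejected by zfs send; if it has no snapshots at all, there is nothing to send in the first place. The sketch below only prints the sends that would be needed, so the logic can be checked without touching a pool. The function name plan_sends is hypothetical and is not part of the scripts above.

```shell
# plan_sends FIRST LAST: print the zfs send invocations needed for a dataset
# whose oldest snapshot is FIRST and newest is LAST.
# Hypothetical helper for illustration; it does not run zfs itself.
plan_sends() {
    first=$1
    last=$2
    if [ -z "$first" ]; then
        echo "skip: no snapshots"
        return 0
    fi
    # A full send of the oldest snapshot is always needed.
    echo "zfs send $first"
    # The incremental send only makes sense when there is a newer snapshot.
    if [ "$first" != "$last" ]; then
        echo "zfs send -I $first $last"
    fi
}
```

For example, plan_sends pool1/data@a pool1/data@a prints only the full send, while plan_sends pool1/data@a pool1/data@c prints both the full and the incremental send.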

Send the data:

$ chmod +x send-snapshots send-datasets

$ sudo ./send-datasets pool1 pool2

Verify that the contents of pool2 are as expected.
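One way to sketch that check is to compare the snapshot names on both pools with the pool name stripped off. The helper name strip_pool and the temporary file paths are hypothetical. Because send-datasets skips the root dataset, any snapshots of pool1 itself would be expected to show up as differences.

```shell
# strip_pool: remove the leading pool name from each dataset or snapshot name
# read on stdin, e.g. "pool1/home@daily" becomes "/home@daily".
# Hypothetical helper for illustration.
strip_pool() {
    sed 's|^[^/@]*||'
}

# Possible usage (commented out because it needs both pools imported):
# zfs list -H -r -t snapshot -o name pool1 | strip_pool | sort > /tmp/pool1.snaps
# zfs list -H -r -t snapshot -o name pool2 | strip_pool | sort > /tmp/pool2.snaps
# diff /tmp/pool1.snaps /tmp/pool2.snaps
```

An empty diff only shows that the same snapshot names exist on both sides; it says nothing about the data itself, so spot-checking files is still worthwhile.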

Destroy pool1 and replace the placeholder file in pool2 with the 3TB disk from pool1. The -f is required because ZFS will see the label on the old disk and halt the replacement as a safety measure.

$ sudo zpool destroy pool1

$ sudo zpool replace -f pool2 /fakedisk /dev/disk/by-id/wwn-0x5000000000000001

The zpool will begin resilvering:

$ zpool status pool2

pool: pool2

state: DEGRADED

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress [...]

config:

NAME STATE READ WRITE CKSUM

pool2 DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

replacing-0 DEGRADED 0 0 0

/fakedisk OFFLINE 0 0 0

/dev/disk/by-id/wwn-0x5000000000000001-part1 ONLINE 0 0 0

/dev/disk/by-id/wwn-0x5000000000000002-part1 ONLINE 0 0 0

/dev/disk/by-id/wwn-0x5000000000000003-part1 ONLINE 0 0 0

errors: No known data errors

Eventually the zpool will reach a healthy state:

$ zpool status pool2

pool: pool2

state: ONLINE

scan: resilvered [...]

config:

NAME STATE READ WRITE CKSUM

pool2 ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

/dev/disk/by-id/wwn-0x5000000000000001-part1 ONLINE 0 0 0

/dev/disk/by-id/wwn-0x5000000000000002-part1 ONLINE 0 0 0

/dev/disk/by-id/wwn-0x5000000000000003-part1 ONLINE 0 0 0

errors: No known data errors

Now that the actual 3TB disk is present in pool2, we can expand the vdevs to use the entire disk:

$ zpool list pool2

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

pool2 8.17T 2.21T 5.96T - 2.72T 0% 27% 1.00x ONLINE -

$ sudo zpool online -e pool2 /dev/disk/by-id/wwn-0x500000000000000[123]

$ zpool list pool2

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

pool2 8.17T 2.21T 5.96T - - 0% 27% 1.00x ONLINE -